Every game developer knows that sound is more than just background noise—it’s what makes a world feel alive. But let’s be honest, traditional sound packs can be a real pain. You either end up with generic audio that players have heard a million times or blow your budget on a massive library just for a few good sounds. This is a common frustration, and it's why so many of us are now looking at AI-powered tools to build our audio landscapes from the ground up.

Relying on pre-made sound packs often feels like you're compromising right from the start. You're scrolling through endless lists, trying to find a footstep that’s almost right for your character walking on gravel, or a laser blast that doesn't quite have the punch you imagined. It forces you into a "good enough" mindset, which is the last thing you want for your game's audio.

The real problem here is that players notice. They've heard that same stock door creak or monster roar in a dozen other titles. When they hear it in your game, it instantly shatters the immersion. It pulls them right out of the unique world you’ve spent months, or even years, building. Your audio should be as unique as your art style, not an afterthought cobbled together from familiar sounds.

Beyond the creative roadblocks, there are two huge practical issues with stock audio: repetition and cost.

When you purchase a popular sound pack, you’re using the exact same assets as hundreds, maybe thousands, of other developers. Your game's sonic identity gets lost in the noise, making it harder to stand out. It’s a surefire way to sound like everyone else.

Then there's the financial side. How many times have you had to buy a $200 pack just to get three or four specific sounds you actually needed? You end up paying for a mountain of assets that will just sit on your hard drive, gathering digital dust. It’s an inefficient model that drains the budget without delivering the custom-fit audio your project deserves.

The market for sound effects software was valued at around $3.5 billion and is expected to hit $7.1 billion by 2033. This surge shows just how much developers are craving better, more flexible tools for sound design.

This is exactly where AI generation tools like SFX Engine completely change the workflow. Instead of searching for a sound that someone else made, you create the exact sound you hear in your head simply by describing it in plain text.

Want a "heavy metallic clang echoing in a vast cavern"? You can generate it. Need a "squishy footstep in thick mud with a slight sucking sound"? You can get that too.

This shift puts the creative power squarely back with the developer. It opens the door to a truly unique and cohesive sound effects library, with a practically unlimited supply of royalty-free sounds to draw from. You're not just saving time and money; you're making your game's audio as original and memorable as its gameplay.

Even with these powerful new tools, it's always good to know all your options. If you're working with a tight budget, you can check out our guide on finding free sound effects for games.

To really see the difference, it helps to put the two approaches side-by-side. The old way of buying static packs has its place, but AI generation offers a level of freedom we've never had before.

| Attribute | Traditional Library | AI-Powered Library |
|---|---|---|
| Creativity | Limited to pre-made sounds | Unlimited; generate anything you can describe |
| Uniqueness | Low; same sounds used by many | High; every sound is newly generated and unique |
| Cost-Effectiveness | Can be poor; pay for unused assets | High; pay for access, generate what you need |
| Workflow | Search, listen, and settle | Describe, generate, and refine |
| Immersion | Risk of breaking immersion with generic sounds | Enhances immersion with bespoke, consistent audio |

Ultimately, choosing AI generation is about taking full control of your game's auditory identity. You move from being a consumer of pre-made assets to a creator of truly custom sound, which can make all the difference in crafting an unforgettable player experience.

Before you generate a single pop, bang, or whoosh, you need a plan. A truly great game sound effects library isn't just a random folder of cool noises. It's a carefully curated set of sounds that all work together to build your game's unique world and reinforce its identity.

Think of it this way: you wouldn't let your artists mix pixel art with photorealistic models. It would be a mess. The same logic applies to sound. Without a clear audio vision, you'll end up with a confusing, disjointed experience that pulls players right out of the game instead of drawing them in. This initial planning is what separates the pros from the amateurs.

This process is deeply connected to core user experience design principles. After all, sound is one of the most powerful tools we have for giving players feedback and immersing them in the world. The goal here is to turn your big, abstract ideas about the game's feel into a concrete list of audio goals.

The best way I’ve found to formalize this vision is with a Sound Design Document. Don't let the name intimidate you; it doesn't need to be a 50-page thesis. It just needs to be a central, shared guide that you and your team can refer back to. It’s your North Star for audio.

For instance, if you're making a gritty tactical shooter, your document might specify "hyper-realistic, sharp weapon cracks" and "minimal, metallic UI clicks." But for a whimsical fantasy RPG, you'd be looking for "ethereal, bell-like chimes" and "lush, layered forest ambiences." Writing this down makes the direction crystal clear.

A strong sonic footprint isn't just a nice-to-have; it's the foundation of your entire audio landscape. It ensures every sound you create serves a purpose, making the difference between random noise and intentional, memorable design.

Your document should outline the main pillars of your game's soundscape. I find it helpful to break it down into a few key categories to keep it organized and actionable.

Thinking through these areas will not only shape your library but also help you write much more effective prompts when you start generating sounds with AI tools later on.

Once you’ve mapped these elements out, you have a solid roadmap. When you fire up your sound generation tool, you won't be just throwing prompts at a wall to see what sticks. You'll be executing a plan, ensuring every single sound effect feels like it truly belongs in your game's world.

Alright, you've got your sonic blueprint mapped out. Now for the fun part: actually making some noise. This is where we shift from planning to creating, using an AI tool like SFX Engine to start generating the raw ingredients for your custom game sound effects library.

The real magic of AI here is how it turns your words into sound. You don't need to know complex audio engineering terms. You just need to be descriptive. The better you can paint a picture with your prompt, the closer the AI will get to the sound you're hearing in your head.

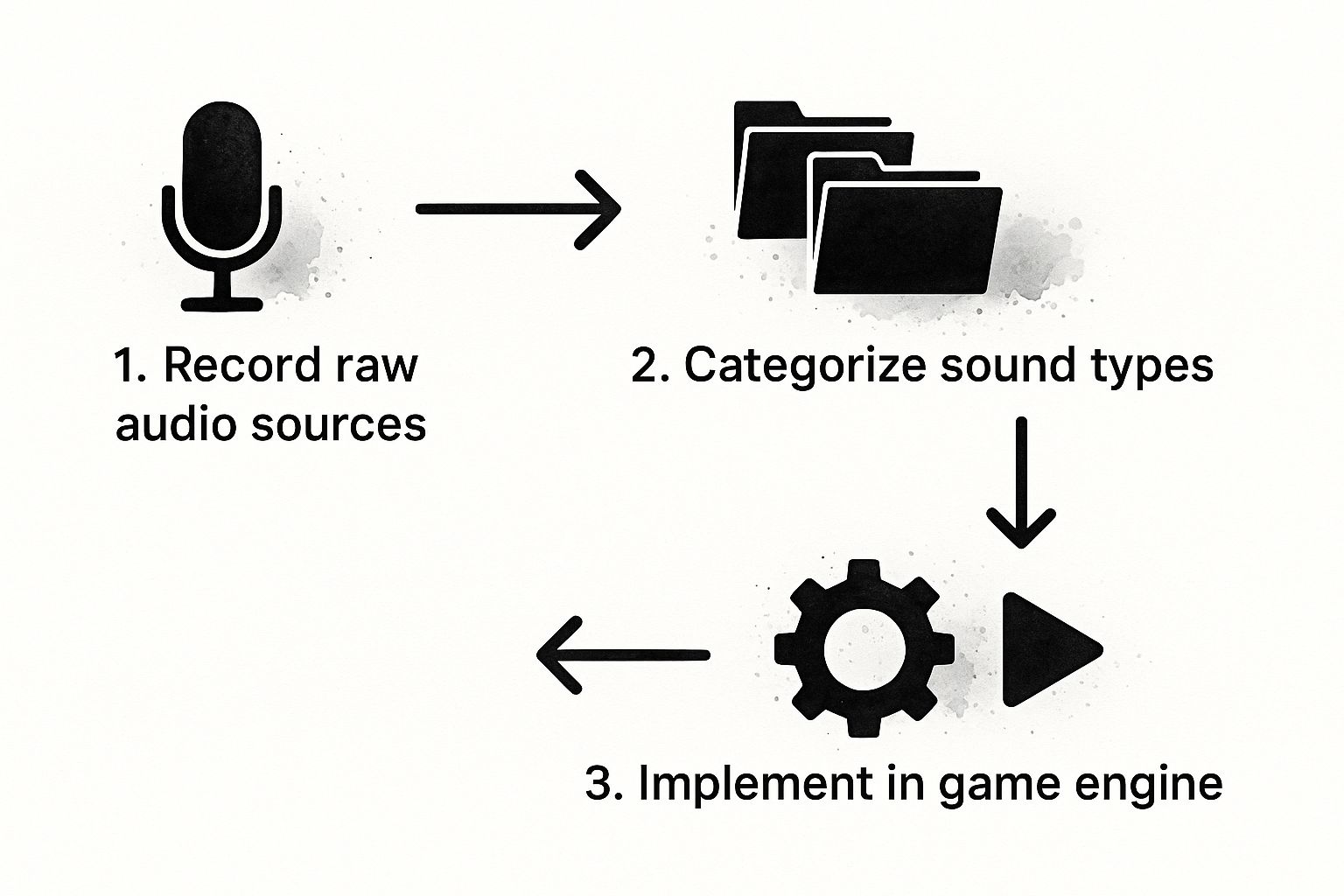

This is a great visual of how the whole process comes together, from that initial idea to the sound actually playing in your game.

As you can see, generating the sound is just the beginning. Organizing and implementing it correctly is what really brings it to life.

Think of writing a prompt as if you're directing a voice actor. "Just say the line" is a bad direction. You'd give them context, motivation, and specifics. It’s the exact same principle with AI sound generation.

Let's use a classic example: a footstep. A simple prompt like "footstep on gravel" will work. You'll get a perfectly usable sound. But we can get so much more detailed, especially when tying it back to our Sound Design Document.

Check out the difference a few words make:

- "Footstep on gravel."
- "Heavy leather boot crunching on loose gravel."
- "Slow, heavy footstep of a soldier in a leather boot crunching on loose gravel, with small stones scattering."

That last prompt isn't just a sound; it's a story. It tells us about the character's weight, their gear, and the environment. That level of detail is what the AI feeds on to create a truly rich and believable sound effect.

I always tell people to think in terms of material, action, and environment. What's it made of? What's it doing? Where is it? Nail those three things in your prompt, and your results will improve instantly.
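To make the three-question habit concrete, here's a minimal Python sketch of a prompt helper. `SoundPrompt` is a hypothetical class for your own notes or tooling, not part of SFX Engine's or any other tool's API. It refuses to build a prompt until material, action, and environment are all filled in, then joins them into one descriptive sentence.

```python
from dataclasses import dataclass


@dataclass
class SoundPrompt:
    """Hypothetical prompt helper (not part of SFX Engine's API)."""
    material: str     # What's it made of?  e.g. "a heavy, rusted metal vault door"
    action: str       # What's it doing?    e.g. "creaks open slowly"
    environment: str  # Where is it?        e.g. "in a large, echoing cavern"
    extra: str = ""   # Optional detail,    e.g. "with small stones scattering"

    def text(self) -> str:
        # Refuse to build a prompt that skips one of the three core questions.
        for field_name in ("material", "action", "environment"):
            if not getattr(self, field_name).strip():
                raise ValueError(f"prompt is missing its {field_name}")
        prompt = f"{self.material} {self.action} {self.environment}"
        # Append the optional detail as a trailing clause.
        return f"{prompt}, {self.extra}" if self.extra else prompt


vault = SoundPrompt("a heavy, rusted metal vault door", "creaks open slowly",
                    "in a large, echoing cavern")
print(vault.text())
# a heavy, rusted metal vault door creaks open slowly in a large, echoing cavern
```

Keeping prompts as structured fields like this also makes it easy to save them next to the generated files, so you can tweak one descriptor and regenerate later.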

Getting this right is becoming more and more important. The game sound design market was valued at around $280 million and is on track to hit $680 million by 2033. This isn't just a niche skill anymore; custom, high-quality audio is a core part of what makes a game stand out.

Your first attempt probably won't be perfect. That's not just okay; it's expected. The whole point of using AI is to iterate quickly. Tweak a word, add a descriptor, remove another, and generate again. Keep doing it until you hit that "aha!" moment. This is a massive advantage over digging through traditional sound libraries—you're not just finding a sound, you're crafting it.

For a library to feel truly dynamic in-game, you need variations. A character doesn't make the exact same footstep sound every single time. So, don't just create one. Generate a whole set:

- `Footstep_Gravel_Walk_01`
- `Footstep_Gravel_Walk_02`
- `Footstep_Gravel_Run_01`
- `Footstep_Gravel_Scuff_01`

A tool like SFX Engine is built for this kind of creative back-and-forth. You just type in what you want, hit generate, and see what you get. It makes experimenting fast and easy.
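Once you have variations, the game still has to choose between them. A common approach is to pick one at random while never playing the same file twice in a row, so the repetition never becomes audible. The Python sketch below shows that logic; `VariationPicker` is a hypothetical name, and `Footstep_Gravel_Walk_03` is an extra take invented for the example. Audio middleware such as Wwise offers random containers that handle this for you, so treat this as an illustration of the idea rather than something you must build yourself.

```python
import random


class VariationPicker:
    """Hypothetical helper: a random variation each time, never the same file twice in a row."""

    def __init__(self, variations, rng=None):
        if not variations:
            raise ValueError("need at least one variation")
        self.variations = list(variations)
        self.rng = rng if rng is not None else random.Random()
        self.last = None  # the file that played most recently

    def pick(self):
        # With a single file there is nothing to alternate with.
        if len(self.variations) == 1:
            return self.variations[0]
        # Choose among every variation except the one that just played.
        choices = [v for v in self.variations if v != self.last]
        self.last = self.rng.choice(choices)
        return self.last


walk = VariationPicker([
    "Footstep_Gravel_Walk_01",
    "Footstep_Gravel_Walk_02",
    "Footstep_Gravel_Walk_03",  # hypothetical extra take
])
steps = [walk.pick() for _ in range(6)]  # six footsteps in a row, no back-to-back repeats
```

With only two variations this degenerates into strict alternation, which is one more reason to generate at least three or four takes per action.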

This same process applies to every sound you've outlined in your plan, whether it's the hum of a sci-fi weapon or the satisfying click of a menu button. If you're looking for more inspiration, see how a free AI audio generator can get your creative wheels turning.

By working through your asset list systematically—generating, refining, and creating variations—you'll end up with a library that is cohesive, rich, and uniquely yours.

So, you’ve just spent a day generating a ton of incredible, custom sounds. The creative high is real. But then Monday morning hits, and you’re staring at a folder overflowing with files named new_sound_final.wav. Chaos. A fantastic game sound effects library is worthless if you can't find what you need when you need it.

This is where building a smart, scalable pipeline saves the day. It’s less about rigid rules and more about creating a common-sense framework that keeps your assets organized. Whether you're a solo dev or part of a big audio team, a good system ensures everything is easy to find, understand, and drop right into the game.

Your first line of defense is a logical folder hierarchy. Seriously, don't just dump everything into one giant "SFX" folder—you'll regret it later. Instead, think about how the sounds function within the game itself and build your categories around that.

This approach is super intuitive and makes browsing for the right asset a breeze. Here’s a simple structure that I’ve leaned on for multiple projects, and it's never let me down:

A setup like this immediately clarifies where a new sound should go and where to look for something you made last month. It’s a small bit of upfront work that pays off massively in the long run.
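If your generated files land in one flat download folder, a short script can do the filing for you. This is a minimal Python sketch under one stated assumption: file names follow the `SFX_Category_SoundType_..._01.wav` pattern used later in this article (for example `SFX_Character_Footstep_Gravel_Run_01.wav`), so the second and third underscore-separated tokens name the folders. The function name and the two-level layout are hypothetical; anything that doesn't fit the pattern stays put and is reported back.

```python
import shutil
from pathlib import Path


def sort_into_folders(dump_dir, library_root):
    """Move .wav files from a flat folder into library_root/<Category>/<SoundType>/.

    Hypothetical rule: names look like SFX_Category_SoundType_..._01.wav, so the
    2nd and 3rd underscore-separated tokens name the folders. Files that don't
    match are left where they are and returned for a human to look at.
    """
    skipped = []
    for wav in sorted(Path(dump_dir).glob("*.wav")):
        tokens = wav.stem.split("_")
        # Need at least: SFX prefix, category, sound type, take number.
        if len(tokens) < 4 or tokens[0] != "SFX":
            skipped.append(wav.name)
            continue
        target_dir = Path(library_root) / tokens[1] / tokens[2]
        target_dir.mkdir(parents=True, exist_ok=True)
        shutil.move(str(wav), str(target_dir / wav.name))
    return skipped


# Example: leftovers = sort_into_folders("sfx_dump", "Assets/Audio/SFX")
```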

With your folders sorted, it's time to get specific with file names. A great name gives you context at a glance, saving you from having to audition dozens of generic files.

Sound_Final_01.wav tells you absolutely nothing. A much more useful name would be:

SFX_Character_Footstep_Gravel_Run_01.wav

Instantly, you know it’s a sound effect (SFX) for a character’s footstep on gravel while running, and it's the first take. It’s searchable, it's clear, and it makes sense to anyone on the team. For a deeper dive, our guide on how to create sounds breaks this down even further.
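Conventions only help if everyone follows them, so it's worth checking names automatically before files reach the project. Here's a minimal Python validator, assuming a hypothetical rule set modeled on the example above: an `SFX` prefix, two or more CamelCase descriptors, and a two-digit take number. Adjust the pattern to whatever your team actually agrees on.

```python
import re

# Hypothetical rule set modeled on SFX_Character_Footstep_Gravel_Run_01.wav:
# an "SFX" prefix, two or more CamelCase descriptors, a two-digit take number.
NAME_PATTERN = re.compile(r"SFX((?:_[A-Z][A-Za-z0-9]*){2,})_(\d{2})\.wav")


def parse_sound_name(filename: str) -> dict:
    """Split a conforming file name into its parts, or raise ValueError."""
    match = NAME_PATTERN.fullmatch(filename)
    if match is None:
        raise ValueError(f"{filename!r} does not follow the naming convention")
    descriptors = match.group(1).lstrip("_").split("_")
    return {
        "category": descriptors[0],   # e.g. "Character"
        "details": descriptors[1:],   # e.g. ["Footstep", "Gravel", "Run"]
        "take": int(match.group(2)),  # "01" -> 1
    }


print(parse_sound_name("SFX_Character_Footstep_Gravel_Run_01.wav"))
# {'category': 'Character', 'details': ['Footstep', 'Gravel', 'Run'], 'take': 1}
```

Running this over a folder before committing catches the `Sound_Final_01.wav` cases early, when they're still cheap to rename.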

Beyond the file name, metadata tagging is where you can really level up your organization. Most audio tools let you attach tags, either embedded in the audio file's own metadata or as labels on the imported asset in your engine (Unity's asset labels, for example). Use them!

Tag your assets with useful descriptors like `loopable`, `one-shot`, `interior`, or `sci-fi`. This simple habit makes your library instantly filterable inside Unity or Unreal, saving your team from endless manual searching.
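If a tool in your pipeline can't store embedded tags, a sidecar manifest works too. The Python sketch below keeps tags in a small JSON document (inlined so the example runs on its own) and returns every file that carries all of the requested tags. The file names and the manifest layout are hypothetical; in a real project you'd load the JSON from disk.

```python
import json

# Hypothetical sidecar manifest mapping file names to tags. It's inlined here so
# the example runs on its own; a real project would json.load() it from disk.
MANIFEST = json.loads("""
{
  "SFX_Ambience_Cave_Drips_01.wav": ["loopable", "interior"],
  "SFX_Weapon_Laser_Fire_01.wav": ["one-shot", "sci-fi"],
  "SFX_UI_Click_Confirm_01.wav": ["one-shot", "sci-fi"],
  "SFX_Ambience_Ship_Hum_01.wav": ["loopable", "interior", "sci-fi"]
}
""")


def find_sounds(manifest: dict, *required_tags: str) -> list:
    """Return, sorted by name, every file that carries all of the requested tags."""
    wanted = set(required_tags)
    return sorted(name for name, tags in manifest.items() if wanted <= set(tags))


print(find_sounds(MANIFEST, "loopable", "sci-fi"))
# ['SFX_Ambience_Ship_Hum_01.wav']
```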

As you generate more and more assets, keeping the entire production pipeline efficient becomes crucial. Learning from experts about mastering AI workflow automation tools can provide some fantastic ideas for streamlining these processes.

Putting these pieces together—a clean folder structure, descriptive names, and smart tags—is what turns a messy collection of .wav files into a professional, searchable, and genuinely useful audio library.

Generating a killer sound is a great start, but it's only half the battle. The real magic happens when you bring that sound into the game engine and make it live in the world. This is where you move beyond just having a folder of cool noises and start crafting a truly immersive audio experience.

If you just drag and drop your new audio files directly into the game, you’re leaving a ton of potential on the table. The goal is to make your sounds feel like they genuinely belong in the environment, not like they’re just slapped on top. This is where a sound designer’s skill truly comes to life—shaping how a sound is perceived based on where the player is and what’s happening around them.

Think of post-processing as the final seasoning that makes your raw audio fit perfectly into the game's acoustic space. You don't need a doctorate in audio engineering to make a massive difference. Honestly, mastering a few core tools will get you 90% of the way there.

Three of the most powerful tools you'll find right inside engines like Unity or Unreal are:

The industry is clearly taking this more seriously. The market for sound effects software—both for creation and in-engine implementation—is projected to double from USD 200 million to USD 400 million by 2033. That's a huge signal that games are demanding more professional and nuanced audio design. You can dig into the numbers yourself in this comprehensive report.

Alright, let's get out of the theory and into some real-world examples. How do these tools actually work on the ground?

Picture this: your player is creeping down a hallway and stops outside a closed wooden door. On the other side, an enemy is reloading their rifle. If you just play the raw "weapon reload" sound, it’ll feel completely wrong—like it’s happening right next to the player's ear.

Pro Tip: The trick here is to use a low-pass filter, which is a type of EQ. In your game engine, you can apply this filter to the reload sound whenever a solid object (like a door) is between the sound source and the player. The filter cuts off the high frequencies, instantly giving it that muffled, "behind a barrier" quality.
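To see why this works, it helps to look at what a low-pass filter does to the samples themselves. Below is a minimal Python sketch of a first-order (one-pole) low-pass filter, run offline on plain lists of floats. In practice you'd use your engine's own filter (Unity, for instance, ships an Audio Low Pass Filter component) and drive its cutoff from an occlusion check such as a raycast between the source and the listener; `occlusion_cutoff` and its 800 Hz and 20 kHz values are hypothetical stand-ins for that logic.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate=48_000):
    """First-order (one-pole) IIR low-pass filter.

    Each output sample moves a fraction `alpha` of the way toward the input,
    which smooths away fast (high-frequency) wiggles while slow (low-frequency)
    movement passes through. The roll-off is gentle: about 6 dB per octave
    above the cutoff.
    """
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def occlusion_cutoff(blocked: bool) -> float:
    # Hypothetical values: heavily muffled behind a door or wall,
    # nearly transparent when the path to the listener is clear.
    return 800.0 if blocked else 20_000.0


def sine(freq_hz, seconds=0.1, sample_rate=48_000):
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def peak(samples):
    # Skip the first half so the filter's start-up transient doesn't count.
    return max(abs(s) for s in samples[len(samples) // 2:])


cutoff = occlusion_cutoff(blocked=True)
print(round(peak(one_pole_lowpass(sine(100), cutoff)), 2))    # low rumble mostly survives
print(round(peak(one_pole_lowpass(sine(8_000), cutoff)), 2))  # high detail is strongly cut
```

The 100 Hz tone comes through at nearly full strength while the 8 kHz tone drops to roughly a tenth of its level, which is exactly the muffled, behind-the-door quality described above.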

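Now, let's take that same player and have them step out of the hallway into a massive, dripping cavern. Their footsteps can't sound the same; they need to reflect the new space. This is a job for reverb: place a reverb zone or volume over the cavern (Unity's Audio Reverb Zone and Unreal's Audio Volume both work this way) so that every sound played inside it picks up a large-space reverb.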

Suddenly, every footstep has a long, echoing tail that sells the immense scale and emptiness of the chamber. It’s these small, context-aware tweaks that elevate your sound design from merely functional to genuinely immersive. By thinking about how sounds react to the world, you turn your library from a simple collection of assets into a living, breathing part of the player’s journey.
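Engine reverbs are far more sophisticated than anything that fits here, but their core idea is simple: feed a delayed, quieter copy of the sound back into itself. The Python sketch below is a single feedback comb filter, which produces a train of decaying echoes; classic algorithmic reverbs such as Schroeder's design combine several of these with all-pass filters to smear the echoes into a smooth tail. The function name and parameter values are illustrative assumptions.

```python
def feedback_echo(samples, delay_samples, feedback=0.5, tail_samples=0):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay_samples].

    Each trip around the loop adds another, quieter copy of the sound, giving a
    decaying train of echoes. `tail_samples` of silence are appended so the
    echoes can ring on after the dry sound ends.
    """
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) or the echoes never die out")
    padded = list(samples) + [0.0] * tail_samples
    out = []
    for n, x in enumerate(padded):
        echo = out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + feedback * echo)
    return out


# A single click (an impulse) makes the echo pattern easy to see.
response = feedback_echo([1.0], delay_samples=4, feedback=0.5, tail_samples=12)
print([round(v, 3) for v in response])
# [1.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.25, 0.0, 0.0, 0.0, 0.125]
```

Longer delays and higher feedback stretch the tail. At 48 kHz, for example, a 50 ms delay is 2,400 samples.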

Jumping into AI for sound design always sparks a few good questions. I hear them all the time from developers and sound artists alike who are trying to figure out where this new tech fits into their process. Let's break down some of the most common ones so you can feel confident about adopting this workflow.

This is probably the biggest question on everyone's mind. Can a sound born from a text prompt truly stand up to something captured with high-end microphones in the real world? The answer isn't a simple yes or no—it's about understanding how to play to AI's strengths.

AI is fantastic at generating a massive variety of clean, specific effects. Think UI clicks, magical spells, or even actions that are just plain impossible to record. It's not necessarily meant to replace the nuanced, one-of-a-kind field recording of a rare bird call. Instead, think of it as an incredibly powerful partner to traditional methods.

My advice? Use AI to build the bulk of your game sound effects library. Then, save your budget and on-location time for those few signature sounds that absolutely demand a live recording. This hybrid approach gives you the best of both worlds: a huge, custom library and a few hero assets.

There's a common misconception that using AI means handing over the creative reins. From my experience, it's quite the opposite. Modern AI sound tools give you an astonishing amount of control, letting you steer the final result with precision.

Your main lever of control is the text prompt itself. The more descriptive you get, the more you can guide the AI. For instance, you can go from a vague "door opens" to "a heavy, rusted metal vault door creaks open slowly in a large, echoing cavern." The difference in the output is night and day.

This is a truly creative process where you can:

You're not just pressing a button and hoping for the best. You're iterating and refining, dialing in the exact texture and feeling you need, just like any other aspect of design.

I get it—the last thing anyone wants is another complicated step in their pipeline. The good news is that integrating AI-generated sounds is incredibly simple and probably fits right into your existing workflow.

AI sound tools like SFX Engine export files in standard formats like .wav or .mp3. Once you've generated the perfect sound and named it according to your project's conventions, you just drop it into Unity or Unreal exactly like you would with any other audio file.

Honestly, the workflow is identical to pulling from a pre-made sound library. The only real difference is that instead of settling for a sound that's "close enough," you've created the perfect asset from the ground up in seconds.
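Because the exports are ordinary files, you can also sanity-check them before import. The Python sketch below uses the standard library's `wave` module, which reads uncompressed PCM WAV files (not .mp3), to report sample rate, channel count, bit depth, and length, and to flag mismatches. The 48 kHz mono defaults are placeholder assumptions; set them to whatever your project actually uses.

```python
import wave


def describe_wav(path):
    """Read basic format details from an uncompressed PCM .wav file."""
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        return {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "bit_depth": wav.getsampwidth() * 8,  # sample width is reported in bytes
            "seconds": wav.getnframes() / rate,
        }


def check_wav(path, expected_rate=48_000, expected_channels=1):
    """Return a list of mismatches; an empty list means the file looks right."""
    info = describe_wav(path)
    problems = []
    if info["sample_rate"] != expected_rate:
        problems.append(f"sample rate is {info['sample_rate']} Hz, expected {expected_rate} Hz")
    if info["channels"] != expected_channels:
        problems.append(f"{info['channels']} channel(s), expected {expected_channels}")
    return problems


# Example: check_wav("SFX_Character_Footstep_Gravel_Run_01.wav")
```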

Ready to stop searching and start creating? With SFX Engine, you can generate endless, unique, and royalty-free sounds for your game simply by describing them. Try it for free and build your custom sound library today. Start Generating at SFX Engine.