November 12, 2025 · Kuba Rogut

Great sound design is what separates a good game from an unforgettable one. It's the art of crafting and placing audio to completely shape how someone plays, giving them critical feedback, emotional nudges, and a real sense of being in the world. It’s not just noise in the background; great audio is an active player in the game.

Sound is easily one of the most powerful—and most underrated—parts of making a game. It gets under your skin, working on a subconscious level to guide you, warn you of what's coming, and celebrate your wins, all without a single word on the screen.

Think about it. That satisfying clink when you snag a rare item, the tense music that builds right before a boss fight, or the faint sound of footsteps that tells you an enemy is just around the corner. These aren't just fancy extras; they are fundamental parts of the game's mechanics.

This guide is all about getting your hands dirty. We're going to move past the theory and walk through a complete, practical tutorial on creating, tweaking, and integrating sound effects. We'll be using SFX Engine as our main tool, so you can follow along with real workflows you can use in your own projects.

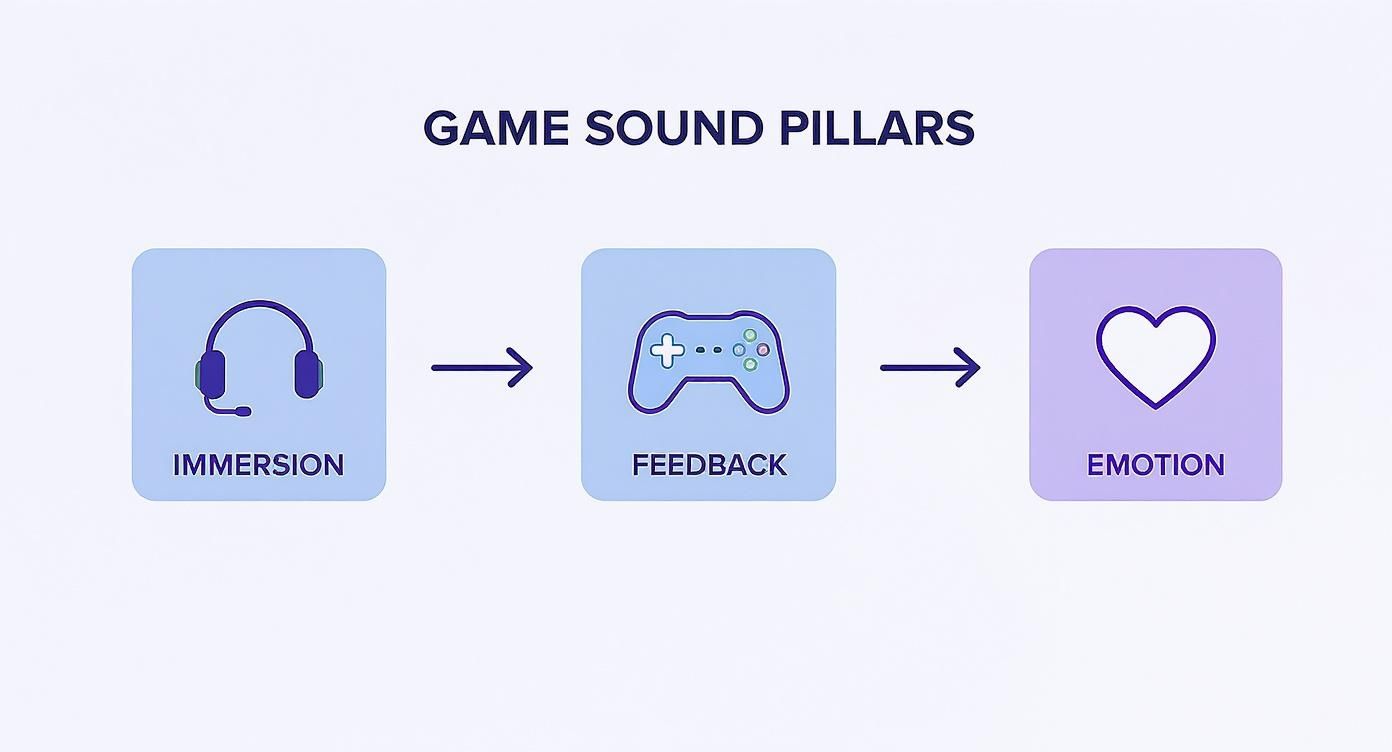

When you boil it down, game audio really serves three main purposes. They all work together to create a tight, compelling experience. If you can get these right, you're well on your way to mastering the craft.

Before we dive deeper, let's lay out these core functions. Think of them as the bedrock of everything we'll be building.

| Pillar | Function | Example in Gameplay |
|---|---|---|
| Immersion | Building a believable and tangible world. | The sound of wind rustling through trees in an open-world RPG, or the metallic echo of footsteps in an abandoned spaceship. |
| Feedback | Providing instant, intuitive confirmation of actions. | The crisp thwack of landing a punch, a dull thud for a blocked attack, or a cheerful chime when solving a puzzle. |
| Emotion | Directly influencing the player's feelings and mood. | A sad piano melody during a character's loss, or a high-energy, percussive track during a frantic escape sequence. |

Nailing these three pillars is what makes the game world feel truly alive and responsive to the player's every move.

Sound is half the experience in a game. It's the invisible layer that dictates pace, builds tension, and connects the player to the digital world on an emotional level. Without it, even the most visually stunning game feels hollow.

The role of sound design has exploded right alongside the gaming industry. As games have become more cinematic and complex, the need for high-quality, interactive audio has gone through the roof. This isn't just about art, either; it's a huge economic force.

The global game sound design market was valued at around USD 2.1 billion in 2023 and is on track to hit USD 4.3 billion by 2032.

This massive growth is powered by a gaming industry that now pulls in three times the revenue of the entire global film and music industries combined. The message is crystal clear: investing in quality audio isn't just a nice-to-have anymore; it's a must-have to compete. For a more detailed look at this, check out our guide on the role of sound in game development.

Every great game has a distinct feel, and a huge part of that comes from its sound. Before you even think about generating a single whoosh or bang, your most important tool is the Game Design Document (GDD). Think of it as your treasure map—it details every character, environment, and interaction that needs its own audio signature.

Tearing down the GDD is how you build a complete audio asset list. This isn't just a to-do list; it's the blueprint for your entire soundscape. You'll pinpoint every sound you need, from the quiet rustle of a character's clothing to the massive roar of a dragon. This process also forces you to define a cohesive audio style that actually fits the game's art and story.

With your list in hand, you'll hit a fork in the road: do you use pre-made sound libraries, or do you create everything from scratch? In my experience, both paths have their place.

Honestly, a hybrid approach usually works best. Use libraries for common background elements and focus your custom work on the signature sounds that define the player's experience—like a hero's main weapon or a crucial magic spell. If you're just starting, it's worth exploring a well-curated game sound effects library just to see what a professional collection looks like.

The whole point is to build a palette that supports the core pillars of game audio, from pulling the player into the world to giving them critical feedback.

Every sound you create needs a purpose, whether it's for immersion, gameplay cues, or just making the player feel something.

Let's make this real. We'll build a unique "magical projectile" sound from scratch with SFX Engine. This is a classic game asset and a perfect way to demonstrate layering—a fundamental sound design technique. A single sound rarely has enough impact; real complexity comes from blending distinct elements together.

Our magic missile will have three main layers: the launch, the travel, and the impact.

In SFX Engine, you can generate each piece with simple text prompts. You could start with "Fast whoosh with a high-pitched tail" for the launch, then "Sizzling magical energy crackle" for the travel, and finally "Heavy magical impact with a crystalline shatter" for the impact. This lets you iterate incredibly quickly until each layer feels just right.

Pro Tip: When you're layering sounds, always think about the frequency spectrum. A great sound effect has a balanced mix of low, mid, and high frequencies. For our projectile, the impact's "thump" provides the low end, the "whoosh" covers the mids, and the "crackle" adds that high-frequency sizzle.

Once you have your three layers, the last step is to pull them into a Digital Audio Workstation (DAW) and mix them together. This is where you transform the separate parts into a single, cohesive asset. If you're looking for a powerful, free tool for processing your audio, Audacity is a fantastic place to start.

Inside your DAW, you'll align the layers on the timeline so the timing feels natural. Then, you can use a few key effects to make them gel.
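In a DAW you do this by dragging clips along a timeline and setting track levels, but the underlying arithmetic is simple enough to sketch. The minimal Python example below assumes each layer is a list of float samples in the range -1 to 1, plus a start offset in seconds and a linear gain. The function name and tuple format are illustrative and not part of SFX Engine or any DAW.

```python
def mix_layers(layers, sample_rate=48_000):
    """Place (samples, offset_seconds, gain) layers on one timeline and sum them.

    Offsets must be zero or positive. If the summed signal would clip
    (peak above 1.0), the whole mix is scaled down so the loudest sample
    sits exactly at full scale.
    """
    # Work out how long the shared timeline needs to be.
    length = 0
    for samples, offset_s, _gain in layers:
        start = round(offset_s * sample_rate)
        length = max(length, start + len(samples))

    # Sum every layer into the timeline at its offset, applying its gain.
    mix = [0.0] * length
    for samples, offset_s, gain in layers:
        start = round(offset_s * sample_rate)
        for i, sample in enumerate(samples):
            mix[start + i] += sample * gain

    # Scale down only when needed, so quiet mixes keep their level.
    peak = max((abs(x) for x in mix), default=0.0)
    if peak > 1.0:
        mix = [x / peak for x in mix]
    return mix


# Toy example at 10 samples per second: launch at 0 s, travel at 0.1 s, impact at 0.3 s.
launch = [0.5, 0.5]
travel = [0.4, 0.4, 0.4]
impact = [1.0, 0.8]
projectile = mix_layers(
    [(launch, 0.0, 1.0), (travel, 0.1, 1.0), (impact, 0.3, 1.0)],
    sample_rate=10,
)
```

This only covers timing and gain; the effects you apply afterwards are what make the layers truly gel.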

By following this simple workflow—deconstruct, source, layer, and polish—you can efficiently build a rich and unique sound palette for any game you're working on.

So, you’ve crafted the perfect sound effect. That's a great start, but it’s only half the job. A sound file sitting in a folder is just potential; it doesn't truly come alive until it’s woven into the fabric of the game world itself. This is where we bridge the gap between your audio workstation and the game engine, turning static files into dynamic, responsive experiences.

This implementation phase is where your audio stops being a simple asset and becomes an integral part of the gameplay. It's about taking your carefully designed sounds, setting them up as audio events, and then wiring them up to triggers that fire based on what’s happening on screen.

Let's go back to that "magical projectile" sound we put together. Inside the game engine, this isn't just a single .wav file. We treat it as an audio event. This event might contain our layered sound, but it also holds the logic for how that sound should behave.

The most straightforward implementation is to hook this event directly to a player action. A programmer would add a line of code that essentially says, "When the player hits the cast button, play the 'MagicalProjectile_Launch' event." The moment that button is pressed, the engine finds our sound and fires it off. It's simple, direct, and gives the player immediate, satisfying feedback.
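That programmer's one-liner works because the engine resolves an event name to audio data at runtime. The sketch below models that indirection in plain Python. `AudioEventSystem`, `post`, `INPUT_TO_EVENT`, and the file name are hypothetical stand-ins, not the API of Unity, Unreal, FMOD, or Wwise.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AudioEventSystem:
    """Hypothetical event system: maps event names to the sound files they play."""

    play_file: Callable[[str], None]               # backend that actually plays a file
    events: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, event_name: str, sound_files: List[str]) -> None:
        self.events[event_name] = list(sound_files)

    def post(self, event_name: str) -> None:
        # Gameplay code only knows the event name; which files play is audio data.
        if event_name not in self.events:
            raise KeyError(f"unknown audio event: {event_name}")
        for sound_file in self.events[event_name]:
            self.play_file(sound_file)


# Input bindings: which event each button fires (illustrative names).
INPUT_TO_EVENT = {"cast": "MagicalProjectile_Launch"}


def on_button_pressed(audio: AudioEventSystem, button: str) -> None:
    event_name = INPUT_TO_EVENT.get(button)
    if event_name is not None:
        audio.post(event_name)
```

Keeping the mapping in data rather than code is the design point: a sound designer can swap the file behind `MagicalProjectile_Launch` without anyone touching gameplay code.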

Of course, getting this right often means collaboration. If you're staffing up a project, finding the right technical talent is key. This guide to hiring Unity developers for game studios is a solid resource, especially given how popular Unity is for indie and AA game development.

Just triggering one sound for one action is a good starting point, but professional sound design for games demands more nuance. The real goal is to build systems that adapt and vary, keeping the soundscape from feeling repetitive or fake. This is where dedicated audio middleware like FMOD or Wwise shines, though modern engines like Unreal and Unity have powerful built-in tools as well.

Think about footsteps. A player might hear them thousands of times in a single session. If it’s the exact same sound every time, it quickly becomes an annoying, artificial distraction. But if it varies slightly in pitch, volume, and the specific sample played, it fades into the background, becoming an invisible part of the world's fabric.
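One common way to vary the sample is a "shuffle bag": play every variation once in random order before any repeats, and never play the same file twice in a row. Here is a minimal Python sketch of that idea with hypothetical file names. Middleware tools typically expose similar options as built-in settings, so treat this as an illustration of the logic rather than how any particular tool implements it.

```python
import random


class ShuffleBag:
    """Deal out every variation once per round, in random order, with no back-to-back repeats."""

    def __init__(self, items, rng=None):
        if not items:
            raise ValueError("need at least one variation")
        self.items = list(items)          # assumed to be distinct file names
        self.rng = rng or random.Random()
        self._bag = []
        self._last = None

    def draw(self):
        if not self._bag:
            # Start a new round with a fresh random order.
            self._bag = self.items[:]
            self.rng.shuffle(self._bag)
            # pop() takes from the end, so make sure the first item of the
            # new round is not the one that just ended the previous round.
            if len(self._bag) > 1 and self._bag[-1] == self._last:
                self._bag[0], self._bag[-1] = self._bag[-1], self._bag[0]
        item = self._bag.pop()
        self._last = item
        return item


steps = ShuffleBag(
    ["step_01.wav", "step_02.wav", "step_03.wav", "step_04.wav"],
    rng=random.Random(7),
)
sequence = [steps.draw() for _ in range(12)]
```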

Let's break down a couple of common but powerful interactive audio systems.

Picture your character sprinting across a grassy field, clattering onto a gravel path, and then splashing through a puddle. Each surface should sound distinct. The brute-force way is to have a programmer write code to check the surface type and call a different sound file. A much cleaner, more scalable method is to build a dynamic system.

Here’s a typical workflow:

This approach creates a seamless and believable experience right out of the box. To take it a step further, you can add slight, randomized variations to the pitch and volume of each footstep, making it almost impossible for any two steps to sound identical.
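A minimal Python sketch of the surface-switch idea, assuming the game can report a surface tag for the ground under the player (for example, from the physics material hit by a downward raycast). The surface names, file names, and jitter ranges are illustrative assumptions, not values from any engine.

```python
import random

# Hypothetical surface tags mapped to footstep variations.
FOOTSTEPS = {
    "grass": ["fs_grass_01.wav", "fs_grass_02.wav", "fs_grass_03.wav"],
    "gravel": ["fs_gravel_01.wav", "fs_gravel_02.wav", "fs_gravel_03.wav"],
    "water": ["fs_splash_01.wav", "fs_splash_02.wav"],
}
FALLBACK_SURFACE = "grass"           # used when a surface has no sounds assigned yet

PITCH_RANGE = (0.95, 1.05)           # playback-rate multiplier, 1.0 = unchanged
VOLUME_DB_RANGE = (-3.0, 0.0)        # attenuation in decibels


def footstep(surface: str, rng: random.Random):
    """Return (file, pitch, volume_db) for one step on the given surface."""
    variations = FOOTSTEPS.get(surface, FOOTSTEPS[FALLBACK_SURFACE])
    return (
        rng.choice(variations),
        rng.uniform(*PITCH_RANGE),
        rng.uniform(*VOLUME_DB_RANGE),
    )
```

Adding a new surface then means adding a dictionary entry, not another branch in gameplay code.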

Music is where implementation truly becomes an art. Instead of just looping one long track, an adaptive score reacts to what’s happening in the game. The classic example is shifting between music for exploration and combat.

The transition is everything. You can't just cut one track and start the next; that's jarring and pulls the player out of the experience. Instead, you design smooth, seamless transitions. For instance, when combat kicks off, the system might keep the string pad from the exploration track playing while crossfading in the combat percussion and bass. This makes the shift feel deliberate and emotionally connected to the action on screen.
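The crossfade itself is usually an equal-power curve rather than a straight line. With a linear fade, two unrelated tracks sound noticeably quieter around the midpoint, while cosine/sine gains keep the combined power constant. Here is a minimal Python sketch with hypothetical stem names, where `t` runs from 0 (pure exploration) to 1 (full combat):

```python
import math


def equal_power_crossfade(t: float):
    """Return (outgoing_gain, incoming_gain) for progress t in [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)


def combat_transition_gains(t: float):
    """Linear gains for each stem during the exploration-to-combat transition."""
    out_gain, in_gain = equal_power_crossfade(t)
    return {
        "string_pad": 1.0,             # shared layer keeps playing throughout
        "exploration_melody": out_gain,
        "combat_percussion": in_gain,
        "combat_bass": in_gain,
    }
```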

This field is always pushing forward. The game sound design market is in the middle of a major shift, with AI, spatial audio, and new workflows reshaping how audio gets made. We're seeing wider adoption of technologies like Dolby Atmos for immersive sound, AI-powered tools that help us prototype faster, and a growing need for audio tailored specifically for VR and AR experiences.

Getting your sounds into the game engine is a huge milestone, but now the real artistry begins: mixing. This is where you take a jumble of individual audio files and sculpt them into a cohesive, powerful soundscape that breathes life into the gameplay. It’s so much more than fiddling with volume knobs—it’s about creating clarity, delivering impact, and making sure the player hears exactly what they need to, right when it matters most.

The big challenge with sound design for games is that you're mixing for pure chaos. Unlike a film with a set timeline, a game's audio is in constant flux, reacting to every move the player makes. Your mix has to be dynamic and tough enough to handle anything, from a quiet, tense moment of exploration to an all-out sensory assault.

At any given second, dozens of sounds might be vying for attention. Without a solid hierarchy, you get audio mud. Critical cues get lost, and players get frustrated. I always tell people to think of their mix as a pyramid—the most important sounds always get the top spot.

So, how do you decide what’s important? A great starting point is to categorize your sounds like this:

With this structure in place, that crucial sound of an enemy sneaking up behind the player will slice right through the noise of a massive firefight.

To help you get started, here's a simple table that lays out a typical audio hierarchy. Think of it as a blueprint for ensuring your mix is clean and effective, no matter how wild the on-screen action gets.

| Priority Level | Audio Category | Mixing Goal |
|---|---|---|
| High | Dialogue & Key Player Actions | Ensure 100% clarity. This audio must never be missed. |
| Medium-High | Enemy Actions & Threat Cues | Cut through the mix to alert the player to immediate danger. |
| Medium | General Gameplay & Foley | Provide rich feedback without overwhelming more critical sounds. |
| Low | Ambiance & Background Music | Set the mood and provide a sense of place, ducking when necessary. |

By consciously layering your sounds this way, you guide the player's attention and make the game more intuitive and fair.
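One simple way to enforce the pyramid at runtime is priority-based ducking: whenever a higher-priority category is playing, everything below it is pulled down. The Python sketch below maps the table's four levels to numbers and ducks each category by a fixed amount per level. The category keys, the -6 dB step, and the -18 dB floor are assumptions to tune by ear, not standard values.

```python
# Priority levels from the hierarchy table (higher number = more important).
PRIORITY = {
    "dialogue": 3, "player_action": 3,       # High
    "enemy_threat": 2,                       # Medium-High
    "gameplay_foley": 1,                     # Medium
    "ambience": 0, "music": 0,               # Low
}


def ducking_db(active, step_db=-6.0, floor_db=-18.0):
    """Gain offset in dB for each active category, relative to the top priority present."""
    if not active:
        return {}
    top = max(PRIORITY[category] for category in active)
    gains = {}
    for category in active:
        levels_below = top - PRIORITY[category]
        # Duck step_db per level below the top, but never further than floor_db.
        gains[category] = max(levels_below * step_db, floor_db) if levels_below else 0.0
    return gains


def db_to_linear(db):
    """Convert a decibel offset to a linear amplitude multiplier."""
    return 10 ** (db / 20)
```

A real mix would also fade these offsets in and out smoothly rather than jumping instantly when a line of dialogue starts or stops.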

A static mix just feels flat and lifeless in a game. The real magic comes from making the audio react to the game world in real-time. We do this with Real-Time Parameter Controls (RTPCs), which are basically links that tie a sound’s properties (like volume, pitch, or filters) to a live game variable.

For instance, you could tie the player’s health stat to a low-pass filter on the master audio bus. As health gets critically low, the RTPC kicks in, muffling everything to simulate dizziness or shock. It’s a powerful bit of feedback that ramps up the tension without a single new visual. A more common example is linking a vehicle's engine pitch to its speed, creating that satisfying and seamless revving sound as you accelerate.
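Under the hood, an RTPC is just a curve from a game value to a sound parameter. The Python sketch below shows two such curves. The ranges (a 500 Hz to 20 kHz filter that only starts closing below 30 health, and a 0.8x to 1.6x engine pitch over 0 to 200 km/h) are illustrative assumptions; in middleware you would typically draw these curves in the tool rather than code them by hand.

```python
def _normalize(value, in_min, in_max):
    """Map value onto 0..1 across [in_min, in_max], clamping outside the range."""
    t = (value - in_min) / (in_max - in_min)
    return min(max(t, 0.0), 1.0)


def rtpc_linear(value, in_min, in_max, out_min, out_max):
    t = _normalize(value, in_min, in_max)
    return out_min + (out_max - out_min) * t


def rtpc_exponential(value, in_min, in_max, out_min, out_max):
    # Equal steps in the game value give equal *ratios* of output, which suits
    # frequencies because we hear pitch roughly logarithmically.
    t = _normalize(value, in_min, in_max)
    return out_min * (out_max / out_min) ** t


def health_to_lowpass_hz(health):
    # Above 30 health the filter is fully open; at 0 it muffles down to 500 Hz.
    return rtpc_exponential(health, 0.0, 30.0, 500.0, 20_000.0)


def speed_to_engine_pitch(speed_kmh):
    return rtpc_linear(speed_kmh, 0.0, 200.0, 0.8, 1.6)
```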

If you want to dive deeper into the technical nuts and bolts of finishing audio, our guide on the audio post-production workflow is a great resource.

A great game mix is a conversation between the game and the player. It anticipates the player's needs, highlights what's important, and gets quiet when it's time to listen. It's an invisible guide that shapes the entire experience.

Sounds don't just hang in thin air; they bounce off walls, get blocked by doors, and echo through canyons. Creating this sense of physical space is a game-changer for immersion, and we primarily achieve it with reverb, occlusion, and obstruction.

These are the details that trick our brains into believing the world is real.
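Occlusion and obstruction are easy to confuse, so here is a rough Python sketch of how they are often modeled. Occlusion (the source is behind a wall) muffles both the direct sound and its reverb, while obstruction (something sits in the direct line, but the sound still fills the same room) affects only the direct path. The 0-to-1 amounts are assumed to come from the game, for example from raycasts, and every dB and Hz figure here is a placeholder to tune, not a value from any engine.

```python
import math
from dataclasses import dataclass


@dataclass
class PathSettings:
    dry_gain_db: float       # level of the direct sound
    wet_gain_db: float       # level sent to the room reverb
    dry_lowpass_hz: float    # low-pass cutoff applied to the direct sound


def propagate(distance_m, occlusion, obstruction, min_distance_m=1.0):
    """occlusion and obstruction are amounts in [0, 1] supplied by the game."""
    # Inverse-distance law: about -6 dB each time the distance doubles.
    distance_db = -20.0 * math.log10(max(distance_m, min_distance_m) / min_distance_m)

    # Occlusion hits both paths; obstruction only the direct one.
    dry_db = distance_db - 24.0 * occlusion - 12.0 * obstruction
    wet_db = distance_db - 24.0 * occlusion

    # The more the direct path is blocked, the more of its highs are filtered
    # away (exponential sweep from 20 kHz down to 500 Hz).
    blocked = max(occlusion, obstruction)
    lowpass_hz = 20_000.0 * (500.0 / 20_000.0) ** blocked
    return PathSettings(dry_db, wet_db, lowpass_hz)
```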

Finally, it's time to master the mix. This isn't about making big creative changes; it's about ensuring your carefully crafted soundscape translates well everywhere. A mix that sounds incredible on your studio monitors might sound like a muddy mess on cheap TV speakers or bass-boosted earbuds. You absolutely have to test on a wide range of devices.

This level of dedication is why experienced audio professionals are so valuable. The 2025 GameSoundCon Game Audio Industry Survey revealed that the average income for game audio pros in the U.S. and Canada hit $155,198—a massive 20% increase from 2023. This really shows how much the industry is recognizing the vital role that great audio plays.

By taking the time to mix, master, and test with care, you’re not just finishing a task; you’re creating an immersive audio experience that will elevate the entire game.

You've nailed the fundamentals of game sound design, which is a huge milestone. But this is really just the starting point. To make your work truly stand out and open up exciting career doors, you need to dive deeper into creating dynamic, living soundscapes that breathe with the game.

Let's get into some of the more forward-thinking techniques and then talk about how to package those skills to build a solid career in this incredibly rewarding field.

One of the most exciting frontiers in game audio right now is procedural audio. Forget playing back pre-recorded files. Here, the game engine itself generates audio on the fly based on rules and real-time game data. A classic example is a car engine. Instead of painstakingly blending a dozen recordings for acceleration, a procedural system synthesizes the engine's roar in real-time, reacting instantly to RPMs, gear shifts, and load.
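To make the idea concrete, here is a deliberately tiny additive synth in Python that ties an engine tone to RPM. It relies on one piece of real physics: a four-stroke engine fires each cylinder once every two crankshaft revolutions, so the firing frequency is (RPM / 60) × (cylinders / 2). The harmonic weights, cylinder count, and sample rate are assumptions, and production engine models are far richer; treat this as a sketch of the principle.

```python
import math


def firing_frequency_hz(rpm, cylinders=4):
    """Four-stroke engine: each cylinder fires once per two crankshaft revolutions."""
    return rpm / 60.0 * cylinders / 2.0


def engine_tone(rpm_per_sample, sample_rate=48_000, cylinders=4,
                harmonic_weights=(1.0, 0.5, 0.3, 0.2)):
    """Synthesize one output sample per RPM value, so RPM can change every sample."""
    total_weight = sum(harmonic_weights)
    phase = 0.0
    out = []
    for rpm in rpm_per_sample:
        # Advance a running phase instead of recomputing sin(2*pi*f*t);
        # this keeps the waveform continuous while the frequency changes.
        phase += 2.0 * math.pi * firing_frequency_hz(rpm, cylinders) / sample_rate
        phase %= 2.0 * math.pi
        sample = sum(w * math.sin((k + 1) * phase) for k, w in enumerate(harmonic_weights))
        out.append(sample / total_weight)   # dividing by total weight keeps |sample| <= 1
    return out


# One second of a revving engine: ramp from idle (800 RPM) to 6000 RPM.
sr = 8_000
ramp = [800 + (6000 - 800) * i / sr for i in range(sr)]
rev_samples = engine_tone(ramp, sample_rate=sr)
```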

This approach gives you infinite variation, which is a game-changer for repetitive sounds. Think about footsteps or rainfall—procedural audio ensures no two sounds are ever identical, completely killing the listener fatigue that comes from hearing the same .wav file loop a hundred times. It's a complex beast to set up, but the payoff is a world that feels genuinely alive and responsive.

We've talked about adaptive music, but advanced systems push that idea way further. Imagine a musical score broken down into dozens of individual stems—separate tracks for drums, bass, melody, and atmospheric pads. An audio engine like Wwise can then weave these stems together in real-time, all guided by what’s happening in the game.

A great pro-tip is to make your musical stems different lengths, but always ensure they're cut on a downbeat. This allows them to loop and layer at different intervals while staying perfectly in sync. The result is a score that’s constantly evolving and never sounds repetitive, even when the player is stuck in the same area.
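Assuming each stem is a whole number of bars long (which is what cutting on a downbeat gives you), every loop restart lands on a bar line, and the full combination only lines up again after the least common multiple of the stem lengths. A small Python sketch with hypothetical stem names and lengths; it needs Python 3.9+ for multi-argument `math.lcm`:

```python
from math import lcm


def bar_seconds(bpm, beats_per_bar=4):
    """Length of one bar in seconds."""
    return 60.0 / bpm * beats_per_bar


def cycle_length_bars(stems):
    """Bars until every stem restarts together on the same downbeat."""
    return lcm(*stems.values())


def stems_restarting(bar, stems):
    """Stems whose loop starts again at this bar (bar 0 is the very first downbeat)."""
    return [name for name, length in stems.items() if bar % length == 0]


# Hypothetical stems, each cut to a whole number of bars.
STEMS = {"drums": 2, "bass": 3, "pad": 4}
```

With 2-, 3-, and 4-bar stems at 120 BPM in 4/4, each bar lasts 2 seconds and the layered texture only repeats exactly every 12 bars, or 24 seconds.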

Think bigger than just switching from "explore" to "combat" music. Picture a system that subtly fades in a tense melodic layer when an enemy is hiding just out of sight. Or one that shifts the music into a different key or tempo to mirror a character's emotional breakdown during a cutscene. This is how the score stops being background noise and becomes an active part of the storytelling.

Virtual and Augmented Reality are a whole different ballgame. In VR, spatial audio isn’t just a nice-to-have feature; it’s absolutely essential for immersion and even basic gameplay. Players need to be able to pinpoint a sound's location—above, below, or sneaking up from behind—with dead-on accuracy.

This means you need a rock-solid grasp of binaural audio and the physics of how sound travels in a 3D space. The ultimate goal is to craft a soundscape so convincing that the player's brain just accepts the virtual world as real.
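Two of the basic cues the brain uses for left/right position are easy to sketch: the interaural time difference (sound reaches the nearer ear first) and the level difference between the ears. The Python below uses Woodworth's spherical-head approximation for the time difference and a constant-power pan as a crude stand-in for the level difference; the head radius and speed of sound are typical textbook values. These cues alone cannot tell front from back or above from below, which is why VR audio relies on head-related transfer functions (HRTFs) rather than panning like this.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # air at roughly 20 °C
HEAD_RADIUS_M = 0.0875       # a commonly used average head radius


def _clamp_azimuth(azimuth_rad):
    # Limit to the frontal half: 0 = straight ahead, +pi/2 = hard right, -pi/2 = hard left.
    return max(-math.pi / 2, min(math.pi / 2, azimuth_rad))


def interaural_time_difference(azimuth_rad):
    """Woodworth approximation, in seconds; positive means the right ear hears it first."""
    theta = _clamp_azimuth(azimuth_rad)
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


def constant_power_pan(azimuth_rad):
    """(left_gain, right_gain) whose squares always sum to 1."""
    position = (_clamp_azimuth(azimuth_rad) / (math.pi / 2) + 1.0) / 2.0   # 0 = left, 1 = right
    return math.cos(position * math.pi / 2), math.sin(position * math.pi / 2)
```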

Your creative and technical skills are the foundation, but building a career requires a smart approach. Studios aren't just looking for people who can make cool sounds; they want problem-solvers who get the entire game development process.

Your portfolio is everything. Seriously. Don't just throw a bunch of isolated sound effects on a page and call it a day. You need to show your skills in context.

Talent is great, but technical expertise gets you in the door. Today, proficiency in audio middleware like Wwise and FMOD is pretty much non-negotiable. These tools are the industry standard for implementing complex, interactive audio.

Beyond that, having a basic understanding of scripting (like C# in Unity or Blueprints in Unreal) can make you a huge asset. It means you can prototype and implement your own ideas without having to constantly rely on a programmer. When you combine next-level creative techniques with solid technical know-how, you’re not just starting a career in game audio—you’re setting yourself up to thrive.

Even with the best guide, you're bound to have questions, especially when you're just dipping your toes into game audio. Let's tackle some of the most common ones that pop up.

If you're just starting out, you can get a lot done with a surprisingly small toolkit. At the bare minimum, you'll need a Digital Audio Workstation (DAW) for recording and editing—something like Reaper or Logic Pro works great. You'll also need a solid pair of studio headphones; don't try to mix on gaming headsets or earbuds.

Beyond the basics, you'll need a way to implement your sounds. While you can work directly in game engines like Unity or Unreal, learning dedicated audio middleware like FMOD or Wwise is what the pros do. And for creating sounds quickly, a tool like SFX Engine is a game-changer, letting you generate custom effects without needing a recording booth and a box of props.

A great portfolio needs to show two things: your creative chops and your technical skills. Just dropping a folder of .wav files on someone isn't enough.

A common starting point is doing "redesigns" of game trailers or gameplay clips. You take an existing video, mute it, and replace every single sound with your own creations. This is a fantastic way to showcase your vision and your ability to mix a complex scene.

But here’s the real secret: the most impressive portfolio piece you can make is a small, playable demo. Building something in Unity or Unreal shows recruiters you understand how audio actually works in a game. Show off an adaptive music system, dynamic footstep sounds on different surfaces, or ambient sound zones. That’s what proves you can do the job.

Think of it this way: a game engine’s built-in audio tools are like a basic toolkit. They can play sounds, loop them, and change the volume. They get the job done for simple projects.

Audio middleware like FMOD and Wwise, on the other hand, is the specialized, high-powered machinery. It’s built specifically for creating complex, interactive audio experiences that are just too cumbersome to build from scratch in the engine.

Middleware is where the magic happens for things like:

Ultimately, middleware gives sound designers the power to build deep, immersive soundscapes without having to constantly rely on a programmer. It's the standard workflow in almost every professional studio for a reason.

Ready to create unique, high-quality audio for your next project? With SFX Engine, you can generate custom, royalty-free sound effects instantly from a simple text prompt. Stop searching through libraries and start creating the perfect sound.