An AI sound effect generator lets you create unique audio clips just by typing out a description. Instead of spending hours digging through stock libraries, you can generate the exact sound you have in your head, from “a gentle summer breeze rustling through pine trees” to “a futuristic spaceship door hissing open.”

Essentially, this technology becomes your creative partner, translating your ideas directly into sound.

For years, sound designers and creators really only had two options for getting audio: go out and record it themselves (field recording) or buy pre-made sound effects from a library. Both methods work, of course, but they come with their own headaches. Field recording can be expensive and take forever, while stock libraries often force you to settle for a sound that’s close enough but not quite right.

AI sound effect generators completely flip that script.

Think of it less like a search engine for audio and more like an audio artist on call, ready to create anything you can imagine. You give it the concept (the "what," "where," and "how" of the sound), and the AI synthesizes a brand-new audio file from scratch based on the patterns it learned during training.

This whole approach is a fundamental shift from finding a sound to actively creating one. It gives you the power to produce audio that is perfectly timed and tailored to your visuals or story, something that used to require a massive budget and a dedicated sound team.

Let's quickly summarize why this is such a big deal for creative workflows.

| Benefit | Impact on Creative Workflow |
|---|---|
| Unmatched Speed | Generate custom sound effects in seconds, not hours of searching or recording. |
| Creative Freedom | Finally create those sounds that don't exist in any library. Your imagination is the only limit. |
| Infinite Variation | Need five slightly different footsteps on gravel? Just ask. You can produce endless versions of a sound with ease. |
| Cost-Effectiveness | This technology drastically cuts down the need for expensive recording gear or recurring library subscriptions. |

This isn't just a minor improvement; it's a genuine step forward that makes high-quality sound creation accessible to everyone.

This shift really democratizes the world of audio production. It allows independent filmmakers, game developers, and podcasters to achieve a level of polish that was once way out of their reach.

It's no surprise that demand for this kind of technology is exploding. The global market for AI voice and sound generation was valued at around USD 3.0 billion and is projected to reach USD 20.4 billion by 2030. Growth that fast shows how central these tools are becoming to modern media production.

Getting a handle on this technology is quickly becoming essential for anyone working in creative media. This guide walks you through everything you need to know, from the basic principles of sound design to advanced prompting techniques and practical workflows. Our goal is to give you the confidence to use an AI sound effect generator not just as a tool, but as a core part of your creative process.

It can feel like you’re performing a magic trick. You type a few words, hit enter, and a brand-new, totally original sound appears out of thin air. But what’s happening behind the scenes isn’t magic—it’s a fascinating mix of data, pattern recognition, and some seriously clever algorithms.

Think of it like training a world-class musician who has spent years listening to thousands of different soundscapes. Instead of learning sheet music, this digital musician studies a massive library of audio data. We’re talking about a meticulously organized collection of everything from jungle ambiances and weather patterns to the subtle whir of a machine or the crunch of footsteps on gravel.

The AI listens to all these examples and learns the building blocks of sound: pitch, timbre, volume, and timing. More importantly, it learns the relationships between them.

This entire learning process is driven by generative models. These are deep learning systems, usually large neural networks loosely inspired by the brain, that learn to produce new data resembling what they were trained on. By sifting through enormous amounts of audio, the AI starts to understand the "rules" of how sounds are built in the real world.

So, when you type in a text prompt, you’re not just searching a database. You’re giving the AI a creative brief. The model takes the meaning of your words and connects it to the audio patterns it has learned, generating a completely new waveform from scratch that fits your description.

The roots of this technology go back to speech synthesis. Early on, researchers found that creating believable human voices required tons of training data. For example, Google’s Tacotron 2 needed tens of hours of clean audio to sound natural; with only 24 minutes of input, the results were barely understandable.

This really drives home a key point: the quality and variety of the training data are everything. The better the library the AI learns from, the more realistic, nuanced, and creative the final sound will be.

Great AI sound tools don't just stop at the prompt. They give you a set of creative controls—a sound designer's toolkit—to shape and refine the result. These parameters let you make practical adjustments without needing a degree in audio engineering.

Here are a few of the most common controls you’ll find:

Think of these parameters as your director's notes to the AI. Your prompt is the main idea, but these controls help you guide the performance until it’s just right.

Once you grasp these concepts, you shift from being a user to a true collaborator. You're no longer just asking for a sound; you're actively guiding a powerful tool to create precisely what your project demands. To see this in action, check out our guide on how to generate audio from text. Getting comfortable with these fundamentals is the secret to unlocking what these tools can really do.

Alright, let's get down to the fun part. Moving from theory to practice is where the real creativity kicks in, and with AI sound generators, it all starts with the text prompt. Think of it as your command center—a simple sentence that holds the power to create just about any sound you can imagine. Honestly, getting good at writing prompts is the single most important skill you can build to get professional-sounding results.

It helps to see yourself as a director and the AI as your incredibly talented (but very literal) sound artist. A vague instruction like "make a footstep sound" gets you a result, but it'll probably be generic. To get something that feels real and unique, you need to give specific, evocative details that paint a clear picture for the AI.

The best way I've found to write a powerful prompt is to build it layer by layer. Start with a simple idea and keep adding descriptive details. This methodical process helps ensure you're covering all the little things that make a sound believable.

Let’s break down this "building block" method.

First, just name the core subject of your sound. This is the foundation.

Next, throw in some descriptive adjectives. What kind of subject is it? What's it made of?

Now, pick a strong verb for the action. The verb you choose can completely change the feeling of the sound.

Finally, give it some context. Where is this all happening? What’s the environment like?

This step-by-step process takes a generic idea and turns it into a rich, detailed scene that the AI can interpret with much greater accuracy. If you want to go even deeper into the practical side of sound creation, check out our guide on how to make a sound effect.

Seeing this evolution laid out can really help clarify how each layer of detail adds crucial information for the AI. A well-crafted prompt doesn't leave much room for guesswork; it guides the generator right to the audio you have in your head.

Here’s a look at how a prompt can evolve.

Prompt Evolution From Simple to Complex

| Prompt Level | Example Prompt | Expected Sound Output |
|---|---|---|
| Level 1 (Basic) | A door closing. | A generic, simple sound of a door latching, with no specific room or material qualities. |
| Level 2 (Detailed) | A heavy oak door creaking shut. | A more characterful sound with the distinct weight and wooden texture of an oak door. |
| Level 3 (Contextual) | A heavy oak door creaking shut slowly in a large, empty stone hall, echoing slightly. | A fully immersive sound effect with the door's character plus the acoustic properties of a cavernous, reverberant space. |

As you can see, specificity is your best friend here. The more detail you give, the closer the final sound will be to what you originally envisioned.
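If you write a lot of prompts, the four building blocks (subject, descriptive adjectives, action verb, context) map neatly onto a small template. The sketch below is a hypothetical Python helper, not part of SFX Engine or any other tool; `SoundPrompt` and `render` are illustrative names. It simply joins the layers into one sentence, and with the right inputs it reproduces the three levels from the table above.

```python
from dataclasses import dataclass, field


@dataclass
class SoundPrompt:
    """Hypothetical helper that assembles the four prompt building blocks."""
    subject: str                                         # 1. core subject, e.g. "door"
    adjectives: list[str] = field(default_factory=list)  # 2. kind or material, e.g. ["heavy", "oak"]
    action: str = ""                                     # 3. verb phrase, e.g. "creaking shut"
    context: str = ""                                    # 4. environment, e.g. "in a stone hall"

    def render(self) -> str:
        noun_phrase = " ".join(self.adjectives + [self.subject])
        # Rough heuristic: "An" before a vowel letter (ignores cases like "an hour").
        article = "An" if noun_phrase and noun_phrase[0].lower() in "aeiou" else "A"
        parts = [article, noun_phrase, self.action, self.context]
        # Drop empty layers so a basic prompt stays short.
        return " ".join(p for p in parts if p) + "."


level_1 = SoundPrompt("door", action="closing")
level_2 = SoundPrompt("door", ["heavy", "oak"], "creaking shut")
level_3 = SoundPrompt(
    "door", ["heavy", "oak"], "creaking shut slowly",
    "in a large, empty stone hall, echoing slightly",
)

for prompt in (level_1, level_2, level_3):
    print(prompt.render())
```

Keeping the layers separate makes it easy to change one block at a time, for example swapping only the context to hear the same door in a small wooden closet instead of a stone hall.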

Never, ever underestimate the impact of your word choices. The difference between "a car driving" and "a vintage muscle car roaring down a wet city street at night" is massive. The first is a sound. The second is a whole scene.

This interface from SFX Engine shows how a simple prompt can be the starting point, with further controls to refine the output.

The key takeaway is that your text prompt is your main creative tool. It gives you the power to define not just the sound itself, but its entire emotional and environmental context.

A great prompt isn't just a description; it's a story. Words like "gentle," "aggressive," "distant," or "muffled" act as emotional cues for the AI, shaping the tone and feel of the final audio.

Think about the feeling you want your sound to create. Is it tense? Peaceful? Exciting? Use emotional language to get there.

By focusing on this building-block approach and using powerful, descriptive language, you move beyond just generating sounds. You start to truly design them, giving you an incredible level of creative control over your projects.

So, you’ve got your hands on an AI sound effect generator. Now what? The real magic happens when you move beyond just cranking out one-off sounds and start building a repeatable process that fits right into your projects. It’s not about throwing out your old methods; it's about creating a powerful hybrid workflow where AI and traditional sound design work together.

Let's walk through a real-world scenario. Imagine you’re a video editor building the entire soundscape for a short film. The scene is a rainy, futuristic city at night. Your job is to make it feel alive, and you want to use a mix of AI-generated assets and your trusty sound library to do it.

Everything starts with a concept. Before you type a single word into the prompt box, take a moment to break the scene down into its core audio ingredients. For our sci-fi city, that list might look something like this: the sound of the rain itself, the low hum of flying cars, footsteps splashing on wet pavement, and the electric crackle of a neon sign.

Once you have that mental map, you can start crafting your first prompts. Be specific, but don't be afraid to leave a little room for the AI to get creative and surprise you.

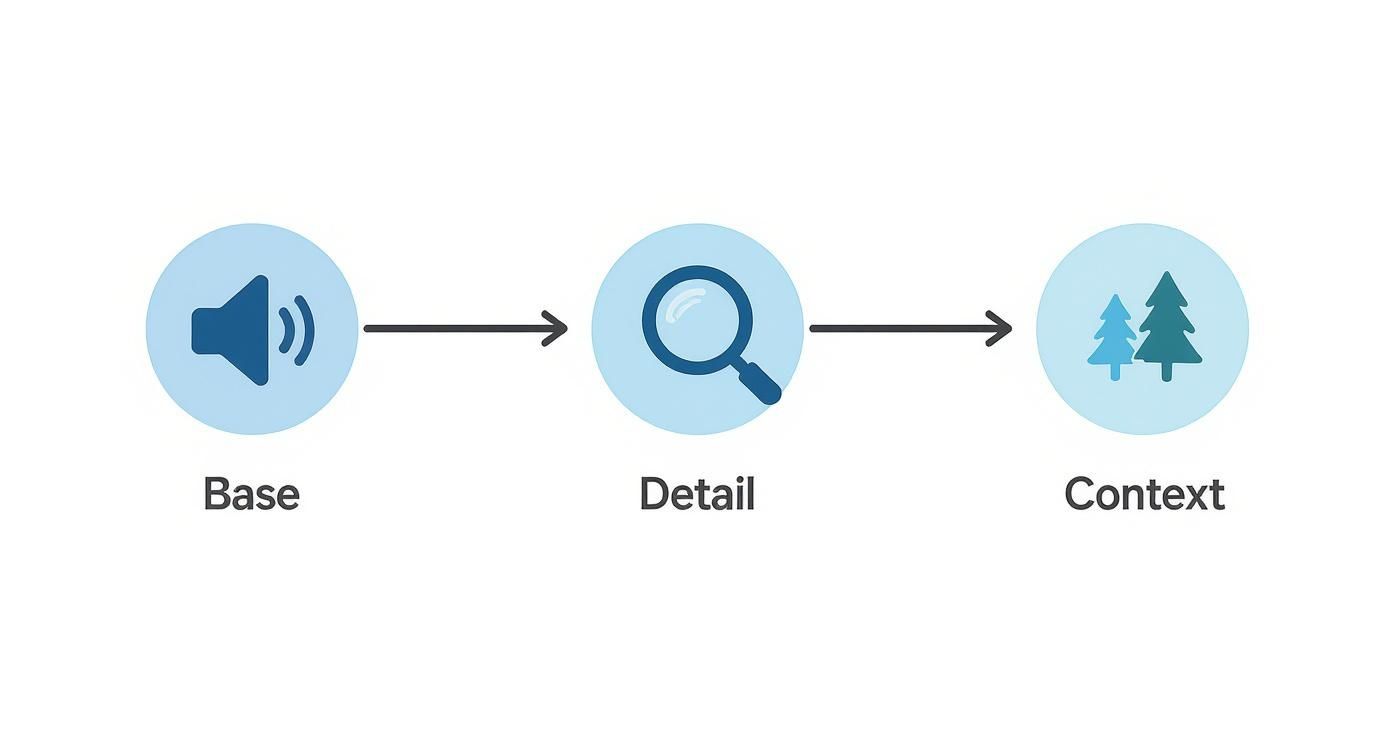

This process of starting with the basic sound, adding detail, and then giving it context is a reliable way to get useful results quickly.

Think of it like building with blocks. You start with the main sound, refine it with details, and then place it into the broader environment.

Let's be honest: no AI-generated sound is going to be perfect right out of the gate. This next step is absolutely critical. You need to listen, pick the best takes, and get them ready for your project.

My advice? Don't just generate one version of each sound. Aim for 5-10 variations. This gives you a palette of options to work with, almost like a film director reviewing multiple takes to find the perfect performance.

Once you’ve found your favorites, it's time to pull them into your Digital Audio Workstation (DAW). This is where your skills as a sound artist really come into play.

"AI gives you the raw marble, but it's your job as the artist to sculpt it. Post-processing is where you add your signature and make the sound truly your own."

Inside your DAW, you’ll use familiar techniques to shape and polish the AI effects:

The final stage is all about composition. Your new AI-generated sounds shouldn't live on an island. The goal is to weave them together with each other and with assets from your existing sound libraries.

For our scene, maybe that AI-generated rain provides the perfect atmospheric bed. But for real dramatic punch, you might layer in a powerful thunderclap you already have in your library. By blending the two, you create a soundscape that is richer, more dynamic, and ultimately more believable than either could be on its own.
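In a DAW you would do this blend with track faders, but numerically it comes down to scaling each layer and summing the samples. The sketch below is a minimal illustration, assuming both clips are already decoded to mono float samples in [-1.0, 1.0] at the same sample rate; `layer` and `db_to_gain` are hypothetical helper names, and the short lists stand in for real audio.

```python
def db_to_gain(db: float) -> float:
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def layer(bed: list[float], accent: list[float], accent_offset: int,
          bed_db: float = 0.0, accent_db: float = 0.0) -> list[float]:
    """Mix `accent` on top of `bed`, starting `accent_offset` samples in."""
    if accent_offset < 0:
        raise ValueError("accent_offset must be non-negative")
    length = max(len(bed), accent_offset + len(accent))
    mix = [0.0] * length
    g_bed, g_acc = db_to_gain(bed_db), db_to_gain(accent_db)
    for i, s in enumerate(bed):
        mix[i] += g_bed * s
    for i, s in enumerate(accent):
        mix[accent_offset + i] += g_acc * s
    # If the summed layers exceed full scale, scale everything down to avoid clipping.
    peak = max((abs(s) for s in mix), default=0.0)
    if peak > 1.0:
        mix = [s / peak for s in mix]
    return mix


rain_bed = [0.2, -0.2] * 4   # stand-in for an AI-generated rain bed
thunder = [0.9, -0.9, 0.5]   # stand-in for a library thunderclap
mix = layer(rain_bed, thunder, accent_offset=2, bed_db=-6.0)
print(len(mix), max(abs(s) for s in mix))
```

Pulling the rain bed down by 6 dB leaves headroom for the thunder. The simple peak scaling here is a crude safeguard; in practice you would more likely set levels by ear and let a limiter catch stray peaks.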

Think of AI as the tool that fills the gaps in your library, giving you that one-of-a-kind sound that ties the whole scene together.

Once you start pulling an AI sound effect generator into your workflow, two big questions inevitably pop up: Is the quality good enough for my project? And, maybe more importantly, can I legally use this stuff? Getting clear on these points right away is crucial, especially if you’re working on commercial projects.

Let’s tackle quality first. It’s no secret that not all AI-generated sounds are keepers. Your role shifts from creator to curator, and you need a critical ear to make sure the output holds up to professional standards.

Listen for those tell-tale digital artifacts—the subtle clicks, pops, or that weird, watery texture that screams "this was made by a machine." You also have to judge its realism. Does that "heavy rain" actually sound like a downpour, or is it just a thin, synthetic hiss?
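Your ears are the real test, but when you are reviewing a large batch of takes, a simple script can flag spots worth a second listen. The sketch below assumes the audio is already decoded to mono float samples in [-1.0, 1.0]; `find_clicks` and its 0.5 threshold are hypothetical values you would tune, not an established standard. It only catches abrupt jumps in the waveform, so a watery texture or a thin, synthetic hiss still needs a human listener.

```python
def find_clicks(samples: list[float], threshold: float = 0.5) -> list[int]:
    """Return indices where the sample-to-sample jump exceeds `threshold`.

    A crude heuristic: a click often shows up as an abrupt discontinuity.
    Loud, sharp transients (a gunshot, a slammed door) will also trigger it,
    so treat each hit as a place to listen, not proof of a defect.
    """
    return [i for i in range(1, len(samples))
            if abs(samples[i] - samples[i - 1]) > threshold]


take = [0.0, 0.01, 0.02, 0.9, 0.03, 0.04]
print(find_clicks(take))  # [3, 4]: the spike and the drop right after it
```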

This is where it gets a little complicated, but it's the most critical piece of the puzzle for any creator. When you generate a sound effect, who actually owns it? The answer lives in the terms of service for whatever tool you're using.

Thankfully, most reputable platforms like SFX Engine lay this out pretty clearly. When you create a sound, they usually grant you a royalty-free license. This is your golden ticket—it means you can use that sound in your films, games, or podcasts without having to pay extra fees every time it's used.

But "royalty-free" isn't the same as owning the copyright. It just means you have legal permission to use it. To really get a handle on the legal side of AI content, it's worth having a foundational understanding of how intellectual property protection works.

Pro Tip: Always read the fine print. Before you commit to a platform, hunt down its licensing agreement. A few minutes of reading can save you from a world of legal trouble later on.

Keep an eye out for these key phrases that spell out your rights:

As AI sound tools have exploded in popularity, the industry has been forced to focus on creating clear, simple licensing. North America is currently the biggest player in the AI sound and voice generator market, making up about 37.9% of the global revenue in 2023. You can see more about these market trends on Market.us.

This boom, fueled mostly by the entertainment and gaming industries, puts a lot of pressure on platforms to offer legal terms that creators can actually understand and trust.

At the end of the day, a great AI sound tool gives you two things: high-quality audio and peace of mind. Your job is to pick a service that offers a transparent licensing model to protect your work. By carefully checking both the audio quality and the legal paperwork, you can make AI a powerful and worry-free part of your creative process.

An AI sound effect generator is a bit like a chameleon—its real value comes from how it adapts to its environment. The way a filmmaker uses one to craft a believable world is completely different from how a music producer might design a new synth patch.

To really get the most out of these tools, you have to think about the specific demands of your craft.

Whether you're scoring a film, building a game, or producing a podcast, each field needs a unique mindset and approach to prompting. Let's dig into how different creators can put these generators to work.

For anyone in film or video, AI sound tools are a massive leap forward for Foley and environmental sound design. You're no longer stuck with the same old stock sound libraries; you can create audio that's perfectly synced and emotionally matched to what's happening on screen.

Of course, sound is just one piece of the puzzle. The same generative principles are now being applied across creative workflows, with a growing number of AI video creation tools helping creators on the visual side.

Gaming is all about interactivity and variation, which is where AI sound generation really comes alive. Hearing the same sword swoosh or door creak over and over again can quickly pull a player out of the experience.
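One practical answer is to generate several takes of each sound and rotate between them at playback time. Many game audio tools offer randomized playback of variations; the class below is a hypothetical, engine-agnostic sketch of that idea (`VariationPool` and the file names are illustrative), not any engine's or middleware's API. It picks a random take but never plays the same one twice in a row.

```python
from __future__ import annotations

import random


class VariationPool:
    """Pick from several takes of one sound, never repeating the previous take."""

    def __init__(self, takes: list[str], rng: random.Random | None = None):
        if not takes:
            raise ValueError("need at least one take")
        self.takes = list(takes)
        self.rng = rng or random.Random()
        self.last: str | None = None

    def pick(self) -> str:
        # Exclude the previous take; fall back to the full list if only one exists.
        choices = [t for t in self.takes if t != self.last] or self.takes
        self.last = self.rng.choice(choices)
        return self.last


pool = VariationPool(["swoosh_01.wav", "swoosh_02.wav", "swoosh_03.wav"])
for _ in range(5):
    print(pool.pick())
```

Passing a seeded `random.Random` makes the sequence reproducible, which can help when testing. An AI generator makes it cheap to fill each pool with five or ten distinct takes.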

For musicians, an AI sound generator is an incredible way to break free from the limitations of sample packs. It’s a tool for creating textures and sounds that are truly yours.

Think of it as a limitless synthesizer. Instead of tweaking knobs, you're using words to sculpt unheard-of sounds that can define your track's unique character and atmosphere.

A polished soundscape can make the difference between a good podcast and a great one. AI generators make it incredibly easy to create a professional sonic identity that keeps listeners hooked.

Anytime you dive into a new creative tool, you’re bound to have some questions. AI sound effect generators are no different. They're incredibly powerful, but getting a handle on what they can (and can't) do is the secret to getting great results.

Let's clear up some of the most common questions people have.

Can these tools help with music? Yes, though maybe not in the way you’d expect. While they excel at creating specific effects like a dragon’s roar or a futuristic spaceship door, they also shine when it comes to musical textures: abstract atmospheric drones, weird rhythmic loops, and one-of-a-kind sonic layers.

They won't write a full symphony for you. But for music producers, they are a goldmine for creating unique source material you can twist, chop, and layer inside your favorite DAW.

I like to think of it as a tool for creating custom sonic ingredients, not the final meal. Prompts like "a haunting bell tone echoing in a crystal cave" or "gritty, rhythmic static from a broken radio" can give you starting points you'd never find in a standard sample pack.

The tech is amazing, but it's not perfect... yet. The main hurdles you'll run into are consistency, especially when trying to generate longer files, and the occasional weird digital artifact that needs a little editing love.

Also, the AI can sometimes get tripped up by really abstract emotional prompts. Describing a complex feeling like "melancholy nostalgia" is a lot harder for it to interpret than "heavy rain on a tin roof." You have to get very descriptive with your prompts.

The good news? The pace of improvement is frankly staggering. These limitations are shrinking with every new model update.

Pricing is all over the map, which is actually a good thing—it means there's probably a plan that fits your needs. Most tools fall into a few common buckets.

My advice? Figure out how often you’ll really be generating sounds, and then find the plan that matches that workflow without breaking the bank.

Ready to stop searching through libraries and start creating the exact sound you hear in your head? With SFX Engine, you can generate custom, royalty-free sound effects in seconds. Try it for free today and hear the difference for yourself.